Thursday, December 31, 2009

To Generate Account Activity Monthwise

isnull(sum(case when OM.month= 1 and tp.Salesordernoitem not like '[%xzcd%]' then DPGrossBookingPriceInEuro else 0 end),0) as JulGross,

isnull(sum(case when OM.month= 1 and tp.Salesordernoitem not like '[%xzcd%]' then Quantity else 0 end),0) as JulPieces,

isnull(sum(case when OM.month= 2 and tp.Salesordernoitem not like '[%xzcd%]' then DPGrossBookingPriceInEuro else 0 end),0) as AugGross,

isnull(sum(case when OM.month= 2 and tp.Salesordernoitem not like '[%xzcd%]' then quantity else 0 end),0) as AugPieces,

isnull(sum(case when OM.month= 3 and tp.Salesordernoitem not like '[%xzcd%]' then DPGrossBookingPriceInEuro else 0 end),0) as SepGross,

isnull(sum(case when OM.month= 3 and tp.Salesordernoitem not like '[%xzcd%]' then quantity else 0 end),0) as SepPieces,

isnull(sum(case when OM.month= 4 and tp.Salesordernoitem not like '[%xzcd%]' then DPGrossBookingPriceInEuro else 0 end),0) as OctGross,

isnull(sum(case when OM.month= 4 and tp.Salesordernoitem not like '[%xzcd%]' then quantity else 0 end),0) as OctPieces,

isnull(sum(case when OM.month= 5 and tp.Salesordernoitem not like '[%xzcd%]' then DPGrossBookingPriceInEuro else 0 end),0) as NovGross,

isnull(sum(case when OM.month= 5 and tp.Salesordernoitem not like '[%xzcd%]' then quantity else 0 end),0) as NovPieces,

isnull(sum(case when OM.month= 6 and tp.Salesordernoitem not like '[%xzcd%]' then DPGrossBookingPriceInEuro else 0 end),0) as DecGross,

isnull(sum(case when OM.month= 6 and tp.Salesordernoitem not like '[%xzcd%]' then quantity else 0 end),0) as DecPieces,

isnull(sum(case when OM.month= 7 and tp.Salesordernoitem not like '[%xzcd%]' then DPGrossBookingPriceInEuro else 0 end),0) as JanGross,

isnull(sum(case when OM.month= 7 and tp.Salesordernoitem not like '[%xzcd%]' then quantity else 0 end),0) as JanPieces,

isnull(sum(case when OM.month= 8 and tp.Salesordernoitem not like '[%xzcd%]' then DPGrossBookingPriceInEuro else 0 end),0) as FebGross,

isnull(sum(case when OM.month= 8 and tp.Salesordernoitem not like '[%xzcd%]' then quantity else 0 end),0) as FebPieces,

isnull(sum(case when OM.month= 9 and tp.Salesordernoitem not like '[%xzcd%]' then DPGrossBookingPriceInEuro else 0 end),0) as MarGross,

isnull(sum(case when OM.month= 9 and tp.Salesordernoitem not like '[%xzcd%]' then quantity else 0 end),0) as MarPieces,

isnull(sum(case when OM.month= 10 and tp.Salesordernoitem not like '[%xzcd%]' then DPGrossBookingPriceInEuro else 0 end),0) as AprGross,

isnull(sum(case when OM.month= 10 and tp.Salesordernoitem not like '[%xzcd%]' then quantity else 0 end),0) as AprPieces,

isnull(sum(case when OM.month= 11 and tp.Salesordernoitem not like '[%xzcd%]' then DPGrossBookingPriceInEuro else 0 end),0) as MayGross,

isnull(sum(case when OM.month= 11 and tp.Salesordernoitem not like '[%xzcd%]' then quantity else 0 end),0) as MayPieces,

isnull(sum(case when OM.month= 12 and tp.Salesordernoitem not like '[%xzcd%]' then DPGrossBookingPriceInEuro else 0 end),0) as JunGross,

isnull(sum(case when OM.month= 12 and tp.Salesordernoitem not like '[%xzcd%]' then quantity else 0 end),0) as JunPieces

from vtgeneral tp

left outer join tblOpenMonth as OM on datediff(day,tp.bookdate,OpenDt) <=0

and datediff(day,tp.bookdate,CloseDt) >= 0

and bookdate >= '2008-07-01' and bookdate <= '2009-06-30'

group by name

Tuesday, December 29, 2009

Date function - remove saturday and sunday

--Finding the number of days between the job status date and today's date

datediff(day,fs.statusDate,getdate())

--Finding the number of weeks between the job status date and today's date

datediff(ww,fs.statusDate,getdate())

--Identifying sunday and removing from the no of days calculation

- (datediff(ww,fs.statusDate,getdate() + case when datename(dw,fs.statusDate) = 'sunday' then 1 else 0 end))

--Identifying saturday and removing from the no of days calculation

- (datediff(ww,fs.statusDate,getdate() + case when datename(dw,getdate()) = 'saturday' then 1 else 0 end))

--Identifying holidays based on the business and removing from the no of days calculation

--table tblholiday contains the dates where the business is on holidays

- (datediff(ww,fs.statusDate,getdate() + case when getdate() not in (select date from tblholiday where finyr = dbo.ufgetfinyr(getdate())) then 1 else 0 end))

Saturday, December 26, 2009

SQL 2005 Editions and Installation

1. SQL Server Standard Edition (32 and 64 bit) ---- For medium business and to handle large databases.

2. SQL Server Enterprise Edition (32 and 64 bit) ---- For large business and to handle very large databases.

3. SQL Server Express Edition (32 bit only) --- This is a free version of sql server easy to use for small database

4. SQL Server Workgroup (32 bit only) --- For small business and small database has no limits on size and number of sizes.

5. SQL Server Developer Edition (32 and 64 bit) --- This has functionality of the Enterprise version but used only for development and testing.

Tip: To install sql server 2005 standard edition 64 bit we have used windows server 2003 (service pack 1) standard x64 edition. Different sql server 2005 64 bit require different editions and service packs of the windos operating systems.

Monday, December 21, 2009

SQL Temp tables, using temp tables

select cm.customerid, cm.name, cm.Phone,

cm.Fax,cm.Email,cm.Contact,category as category,0 as x,rm.repid into #temp1

from customermaster cm left outer join repsmaster rm on cm.repid = rm.repid

where customerid not in

(select customerid from vtgeneral where bookdate >= getdate() - 730

and bookdate <= getdate() - 365 and customerid is not null) and category = 'Direct'

--using the above table and creating another output

select cm.customerid, cm.name, cm.Phone, cm.Fax,cm.Email,cm.Contact,cm.category as repcategory, sum(isnull(DPGrossBookingPriceInEuro,0)) as x from #temp1 cm inner join vtgeneral vtg on cm.customerid = vtg.customerid where bookdate is not null and (bookdate >= getdate() - 1065

and bookdate <= getdate() - 730 ) --and cm.category = 'Direct' group by cm.customerid, cm.name, cm.Phone, cm.Fax,cm.Email,cm.Contact ,cm.category

union

select cm.customerid, cm.name, cm.Phone, cm.Fax,cm.Email,cm.Contact,cm.category as repcategory, 0 as x from #temp1 cm where cm.customerid not in (select customerid from vtgeneral where bookdate is not null and (bookdate >= getdate() - 1065

and bookdate <= getdate() - 730 ) and customerid is not null)

group by cm.customerid, cm.name, cm.Phone,

cm.Fax,cm.Email,cm.Contact,cm.category

drop table #temp1

Thursday, December 17, 2009

SQL Cursors

But having said it is not recomended from performance point of view. Here is the script in case you would like to use where we cannot avoid.

Declare hello Cursor LOCAL For

Select id from table

Open hello

Fetch Next From hello into @Id

While (@@Fetch_Status=0)

begin

-- here you can write the query to loop through id and put in your update conditions

Fetch Next From hello into @Id

end

Close hello

DeAllocate hello

Wednesday, December 16, 2009

SQL Shrink Database

1. Use the query in my post to find out the maimum table size and see if you have some error_history records which can be deleted or moved to archive.

2. The second step is to shrink the database.

3. This can be done by shrinking the mdf file.

use databasename

exec sp_helpfile

dbcc shrinkfile (databasename_Data)

4. The database can also be shrinked using the SSMS, by clicking the database --- task --- shrink ---- database.

5. The database can also be shrinked using the SSMS, by clicking the database --- task --- shrink ---- files, where you can shrink the log file or the database file.

To maintain the log file, the log has to be backed up and it can be shrunk using the below script

BACKUP LOG [databasename] TO DISK = N'Z:\DATABASE\TRANSACTION LOG BACKUPS\globalconac.trn' WITH NOFORMAT, INIT, NAME = N'databasename-Transaction Log Backup', SKIP, NOREWIND, NOUNLOAD, STATS = 10go

DBCC SHRINKFILE (N' log file name' , 786)

go

Monday, December 14, 2009

SQL - Moving Tempdb to another drive

1. The size of the tempdb grows and if there is no space this will cause the applciations to slow down eventually to a halt.

2. The space on the other hard disk could give a better performance than the existing one as a result of more space.

The following code can be used to move the tempdb from the existing drive to e:\

USE TempDB

GO

EXEC sp_helpfile

GO

USE master

GO

ALTER DATABASE TempDB MODIFY FILE

(NAME = tempdev, FILENAME = 'e:\sqldata\datatempdb.mdf')

GO

ALTER DATABASE TempDB MODIFY FILE

(NAME = templog, FILENAME = 'e:\sqldata\datatemplog.ldf')

GO

Also please note the sql server service has to be stopped and restarted to have this new tempdb location to work.

SQL Optimization

1. Table should have primary key

2. Table should have minimum of one clustered index

3. Table should have appropriate amount of non-clustered index

4. Non-clustered index should be created on columns of table based on query which is running

5. Do not to use Views or replace views with original source table

6. Triggers should not be used if possible, incorporate the logic of trigger in stored procedure

7. Remove any adhoc queries and use Stored Procedure instead

8. Check if there is atleast 30% HHD is empty – it improves the performance a bit

9. If possible move the logic of UDF to SP as well

10. Remove * from SELECT and use columns which are only necessary in code

11. Remove any unnecessary joins from table

12. If there is cursor used in query, see if there is any other way to avoid the usage of this (either by SELECT … INTO or INSERT … INTO, etc)

SQL Backup using SQL Management Plans

Sunday, December 13, 2009

sql indexing, optimization

1. clustering index and 2. non- clustering index.

Clustering index is referred to like book shelf where the files in the shelf are refered to as pages, records in the files are referred to as the rows in the table, the shelf drawers on its own by alphabetical order is referred to as intermediate level.

Non clustered index is referred to as the index at the back of the book, where in a book when looked for a particular topic you can see references of certain pages based on that topic, in similar way non clustered index references the pages of similar key.

The next step will be to how effectivily shall we use the indexing. The main two functions of a indexing is to provide uniqueness and to return results much faster.

The next step is choosing between cluster and non-cluster index. We can have only one cluster index per table, where we can have 249 non - cluster index on a table. So in most cases we have the primary key set as the cluster index and work on the selectivity to determine the need of the number of non-cluster indexes.

Now how to determine and set up indexes.

For example if you are looking for a word "customer" in a book then a index will help you to find all the pages where the customer is available.

For example if you are looking to search all the words in a book then it is better to read the ent book which is table scan in our sql query, so in this case a index will be of no beneficial only burden.

There are a few ways to determine the need for a index, sql profiler is one of our best tools to start with, to check where there is more time spent.

Then based on the time, when the tables are identified this query can be used to determine the selectivity ratio.

selectivity

select count(distinct salesordernoitem) as '# unique',

count(*) as '# rows',

str(count(distinct salesordernoitem) / cast (count(*)as real),4,2) as

'selectivity' from transactionpricing

if the results are as below

#unique #rows selectivity

75000 75000 1.00

in this case an index on the sales order number in the transaction pricing table will be appropriate.

Monday, December 7, 2009

SQL SSRS Linked Reports

Using the Report Manager --- Click the base report where the linked report to be created.

Then click the properites tab

Click on the Linked report.

You can provided the name of the linked report.

The location where the linked report is saved can be changed if required.

On the side you can see the parameters, datasources, execution, security and history.

- Remember for the linked report there is no separate report definition file (.rdl file).

- Also the parameters can have a different list of selected parameters from the base report but not a different SET of parameters list from the base report.

- As the rdl file is the same the changes performed on the base report implies to the linked report, so this could be a advantage in some scenarios and disadvantage in some cases, it is upto the user to put in use.

Sunday, December 6, 2009

SQL SSRS ad hoc report generation

Go to the report manager page.

Click on the datasource.

General a report model.

Also the user can generate the ad hoc reports provided he has the option given to generate reports and save them in the Myreports folder.

This will allow the users to generate ad hoc reports from their own web browsers.

Saturday, December 5, 2009

SQL SSAS Dimension relationships

Regular - Is used when relationship of a dimension is linked directly to a measure group through the attribute.

Reference - Is used when relationship of a dimension is linked directly to a measure group through a intermediate dimension.

Many-to-Many - Is used when relationship of a dimension is linked through a measure group through an intermediate measure group.

Fact - Is used when relationship of a dimension is linked through a fact table to define the measure group.

If anyone can elaborate further on this, you are welcome.

Friday, December 4, 2009

SQL SSAS Backup

The following steps to BACKUP the ssas cubes.

Connect to the analysis servvices. Right click the cube to be backed up.

Click on Backup. Uncheck File compression --- this is used compress the backup file at the expense of performance.Uncheck the encrytion --- this can be checked if the backup file need to be pasword protected in that case a password has to be provided.

check the file overwrite option based on your requirement

Click Browse in the selected path enter the folder where the backup file to written.

Now click on the script and script action to new query window

This will write a query to query window. Copy this and open a new job in the sql agent.

Scheduling the backup using sql agent

Click New job

provide a name for the job , select step, select type as SQL SERVICES ANALYSIS COMMAND, Enter the server , past the script below

SQL SSRS Report performance

To execute a report, when the request is sent though HTTP, the rdl is called based on the paramters passed and the dataset queries based on the query, if the query has to return a large number of rows, there is a possibility of time out error or locking.

To avoid this cache can be used, i.e., if the same paramters are used by the user report can be generated from the Report server tempdb , and report server renders from the stored intermediate format. Please not the cache will not work if the report credentials are saved in the report server.

Snapshots : Cache reports do not preserve the previous versions of the report generation, i..e, history in that case snap shots come in place , you can store prevous versions in the report history and this can stored by a schedule, by specifiing the number of reports can be stored.

This can be setup in the http://localhots/reports site settings --- select default settings for the history ---

please note keeping unlimited number of snapshots in history make create disk space issue.

Enjoy developing ssrs reports.

SQL SSRS item level and system level roles

site settings --- Configure item level role definitions for item level

and Configure system level role definitions for system level

The item level role definitions are :

- Consume reports

- Create linked reports

- Manage all subscriptions

- Manage individual subscriptions

- Manage folders

- Mange reports

- Manage models

- Manage resources

- Set security for individual items

- View data sources

- View models

- View folders

- View reports

- View resources

The system level role definitions includes

- Execute report definitions

- Generate Events

- Manage jobs

- Manage report server properties

- Manage roles

- Manage shared shedules

- Manage report server security

- View report server

- View shard schedules

Friday, November 27, 2009

Security of a package - SSIS

How can you achieve this.

In the package click on properties --- protection level

The following options are available for selection

- Do Not Save Sensitive will remove sensitive data every time the package is reopened.

- The Encrypt Sensitive With User Key protection level encrypts the sensitive information in the package by using keys based on the current user. When a new user opens the package, he or she must reenter sensitive data, such as a SQL Server password.

- Encrypt Sensitive With Password will encrypt sensitive data with a package password. This would require you to enter a password every time you opened the package.

- Encrypt All With User Key will encrypt the entire package with the user key. A new user will not be able to view or execute the package.

Data Transfer slow - SSIS package

In the destination task --- oledb destination task , the OLE DB Destination, when connected to SQL Server, enables data to be inserted with bulk insert statements by using Fast Load, click on the olebd destination properties --- click on access mode --- select fast load

Tuesday, November 24, 2009

Error: 18456, Severity: 14, State: 16

This error is caused based on the user password is not correct from the client system. This message appears in the log file in this location C:\Program Files\Microsoft SQL Server\MSSQL.1\MSSQL\LOG

This error also appears on the event viewer.

Error: 18456, Severity: 14, State: 16

Login failed for user 'sa'. [CLIENT: 192.168.x.xxx]

If this message appears continously check with client system if there is any sql job running and trying to attempt to connect to the server and password is not set right.

In our case we had a diagnostic tool running from this client and the password was changed, this has caused this error message

Wednesday, November 18, 2009

SQL SSRS Report optimization

Filtering the dataset enables you to use a cached report that reuses the same data.

Filtering the dataset means that SQL Server Reporting Services will query all the data and later filter the information. The advantage of filtering the dataset is that other reports can use the same data when using cache reports, increasing their performance. However, if the report is not configured as cached, filtering the dataset will likely be slower than using parameters.

Sql Connection

For the above please check the following.

Option 1

1. Log into sql server , go to start --- control panel ---administrative tools --- services

2. Check sql server and make sure it is running.

3. Check for sql browsing services and make sure it is running.

Option 2

1. go to start --- programs --- administrator tools --- computer management

2. Click on services and applications

3. Click on sql server 2005 network configurations

4. Click on protocols for MSSQL Server

5. Click on TCP/IP

5. click on IPAddresses tab , check for the ports to be 1433

Option 3

1. check the main firewall , please make sure you contact your network admin when you do this.

2. Under the change settings

3. Go to the exception tab

4. add port

5. Name : SQLPort Number: 1433Protocol: Select TCP

We had the same issue again on 20th July 2012 where Brian had a condition to allow the ports, this was removed by Brian in the main firewall and it worked.

Friday, November 13, 2009

SQL Server Profiler results

1. The integer data displays the numerical information associated with the events.

2. The text data column shows the text description of the event such as MDX statement

3. The EventSubclass column shows the event subclass, such as the ExecuteSQL, WriteData, BuildIndex, or other subclass.

4. The duration displays the event duration.

Thursday, November 12, 2009

SQL Server Names Instance installation

I have created a second instance in my sql server as MSSQL2, after the installation I tried to connect using the SSMS sql server management studio, but to my surprise i was given a error to check if the sql server exists or user details are correct.

The first instance connected without issues. But the second instance MSSQL2 will not connect.

I did check my authentication details, username and password.

I did check the sql surface area configureation details to see the MSSQL2 is online.

I did check the services to see if the MSQL2 is online.

But the issue was the sql server browser service was NOT online. When I brought is online i was able to connect without issues.

Wednesday, November 11, 2009

SQL error handling,begin Tran,Commit , rollback, try and catch

s--sql statment

IF @@ERROR <> 0

BEGIN

ROLLBACK TRAN

END

COMMIT TRAN

GO

SQL DB Mail

EXEC msdb.dbo.sp_send_dbmail @recipients = 'vijay@xxx.ie',

@copy_recipients = 'vijay@xxx.ie',

@subject = 'LMK GP Integration Failure - Receivables',

@body = 'LMK GP Integration Failure - Receivables';

Monday, November 9, 2009

SQL SSAS High Availability

The following are the major advantages of doing so.

1. SSAS Solution will stay online even the operting system is corrupted.

2. SSAS Solution will stay online even when there is a hardware failure.

Thursday, November 5, 2009

SQL Database Backup

--******************************************************************

--schedule this for nightly run

--****************************************************************************

--back up database

BACKUP DATABASE [Database] TO DISK = N'Z:\DATABASE\Database_backup.bak' WITH NOFORMAT, INIT, NAME = N'ManufacturingChina_backup', SKIP, REWIND, NOUNLOAD, STATS = 10

GO

--backup up transaction log

BACKUP LOG [Database] TO DISK = N'Z:\DATABASE\TRANSACTION LOG BACKUPS\Database.trn' WITH NOFORMAT, INIT, NAME = N'Database-Transaction Log Backup', SKIP, NOREWIND, NOUNLOAD, STATS = 10

go

-- shrink log file

DBCC SHRINKFILE (N'Database_Log' , 786)

GO

--******************************************************************

--schedule this for hourly run

--****************************************************************************

--backup log hourly

BACKUP LOG [Database] TO DISK = N'Z:\DATABASE\TRANSACTION LOG BACKUPS\Database.trn' WITH NOFORMAT, NOINIT, NAME = N'Database-Transaction Log Backup', SKIP, NOREWIND, NOUNLOAD, STATS = 10

GO

-- backup log hourly

DBCC SHRINKFILE (N'Database_Log' , 786)

go

Wednesday, November 4, 2009

SQL Data Mining - Processing

Process Structure --- loads the data to the structure without affect the mining model.

UnProcess --- removes the data from structure and the mining models

Process Full --- process both the mining and the structure.

Tuesday, November 3, 2009

Data Mining

Microsoft has put forth algorithims offered in sql server 2005. the models are

i. decision trees

ii. naive baiyes

iii clustering

iv sequence clustering

v time series

vi association

vii neutral network

Decision Tree : Lets take example of a decision to identify couples who are likely to form a successful marriage. The input attributes will include age, religion, gender, political views, height and so on. the predictable attribute will be marrigage outcome. The first split say is based on the political views 70-30, and the second split is based on the height. In the 70% split, the second split say is height split as 60 are 6' tall and 40 are less than 5' 8" and on the 30 % split, the second split say is 58% are 6' tall and 18% are so less than 5' and 8", this makes to predict the chances of having successful marriage are sharing 70% common political views and men who are 6' tall.

Naive Bayes: This is model can be used to predict a income range for the given occupation.

Clustering: This model can be used to predict where a graph showing per capital income vs per capital dept for each country. This helps to read where there is huge number of countries, by clustering them together it is easy to read.

Time series: This model can be used to predict based on the given time. For time series algorithim you need key time, input and predictable attributes.

In my next article let us go through a step by step process of creating a data mining project.

Monday, November 2, 2009

Get Accperiod / Financial period

For example 01st July 2009 will 01/2009 and 01st Nov 2009 will be 05/2009

ALTER Function [dbo].[GetAccperiod](@transdate as datetime)

returns varchar(10)

as

begin

declare @AccPeriod varchar(10)

declare @Month smallint

declare @Year int

--declare @tod datetime

--select @tod=cast(@transdate as datetime)

begin

--Get Current Month

select @TransDate = convert(datetime,convert(varchar,@TransDate,101))

select @Month = month(Closedt),@Year = Year(CloseDt) from tblOpenMonth where opendt <= @TransDate and CloseDt >= @TransDate

-- Select @Year = year(@transdate)

if (@Month < 7)

begin

Select @Year = @Year -1

end

if (@Month >=7)

begin

Select @Month =@Month -6

--Append zero if month returns a single digit value

if @Month < 10

Select @AccPeriod='0' + cast((@Month) as varchar(2))

else

Select @AccPeriod=cast((@Month) as varchar(2))

end

else

begin

Select @Month = @Month + 6

--Append zero if month returns a single digit value

if @Month < 10

Select @AccPeriod='0' + cast((@Month) as varchar(2))

else

Select @AccPeriod=cast((@Month) as varchar(2))

end

Select @AccPeriod = @AccPeriod + '/' + cast(@Year as varchar(4))

return (@AccPeriod)

end

End

Sunday, November 1, 2009

Removing the witness server

The advantages includes when the principal server fails, the mirror automatically takes over, please as soon as the sql service in the principal server fails.

The disadvantage if you want to restart the principal server even at that point the mirror server takes over, then to bring this back again, we need to login to the mirror server and click on the failover.

To remove the witness server from database mirroring after it has been configured with witness server adopt the following steps.

Please note before doing this, this needs to planned off the working times, also there should be complete backups and log backups done before this.

1. Stop the mirroring on the principal server.

2. Take a log back up.

3. Then configure again only with principal and mirror server.

Thursday, October 29, 2009

Dimension processing olap --- Processing options

The process update option is a more light weight processing option, this allow to process and updates, inserts and deletes the data, the cube need to have to be processed.

The process default option does not detect data changes.

Process Add detects member additions only.

The process full option requires to process the cube as well.

creating Index, rebuilding index

drop index idx_repid on tblrepsmaster

--create or rebuild the index, it is advised to rebuild once a month

Create unique index idx_repid on dbo.tblrepsmaster(repid)

Monday, October 26, 2009

Execute Packages with DTExecUI and DTExec

The DTExecUI can be called from command prompt and this will bring up the windows interface to run the ssis package.

The DTExec can be executed from the dos command prompt. The syntax for this will be

DTExec /f mypackage /set \package.variables[user::packagepath].value;"c:\ssis"

Maximum error count property - Control flow

For example in a sequence container of for loop container the maximum error count can be set.

Report Builder Model

1. Relational report models

2. ssas report models

relational report models are best created using the bids(business intelligence development studio).

ssas report models are best created using reporting services and ssms, they cannot be created using bids.

creating a report model is a three step process.

using the bids 1. create the data source 2. create the data source view and 3. create the report model.

creating a report ssas report model, using the reporting services, login to http://localhost/reports , select the datasource folder, create a datasource, click on the generate modle button, click on the change location of the model path, click ok to create the model.

Thursday, October 22, 2009

High Availability - Data

Our company has certain data which requires high availability so we use the database mirroring along with witness ( debatable) to make sure we can switch over with no data loss.

Having said that, the challenge here is to maintain not only the database but also the ssis packages hosted on the server, ssrs reports on the server.

Since this information is stored on msdb, it becomes very critical as we cannot replicate the msdb database as far as I know, as it will have the reference to the original msdb database.

So we had planned to replicate the entire scenario of ssis packages and ssrs reports on to the mirror server.

The next challege here is the principal server has certain data integrations to another sql server. These integrations have to be replicated but the server reference has to be changed if linked servers are used.

The next challenge we had is to do database mirroring between different locations for eg. US and europe, we achieved this by creating a common domain for the sql servers and it worked like charm. Now the speed could be a issue will keep posted based on the performance.

The level1 gives the comfort of high availability of data with automatic failover even in case of a server failure.

The following may be expensive but it worth based on the data requirement.

Level 2

Level 3

The data is stored external to the building.

Fail over --- Role Switching

Fail over --- Role Switching - Database Mirroring

1. Automatic failover using a witness server.

2. Manual failover

- This can be done in the sql server management studio

- Click the database --- properties ---mirroring --- click failover

3. Forced Failover

Use this query for the forced failover

ALTER DATABASE

Thursday, October 8, 2009

Copy date with Heading from Query analyzer

In the sql server managment studio --- click Query --- Query options ---- Under Results ---click Grid --- check the box under "Include column headers when copying the results"

Replace String Function

--in this example the quartor was spelt wrong so we use this query to update

quarter_name --- is the field name

quartor -- is the characters to be replaced

quarter -- is the one to be replace with

update tblbsbcalendar set quarter_name = replace(quarter_name,'Quartor','Quarter')

Thursday, October 1, 2009

SQL Server service pack version for sql 2005

Release Sqlservr.exe

RTM 2005.90.1399

SQL Server 2005 Service Pack 1 2005.90.2047

SQL Server 2005 Service Pack 2 2005.90.3042

SQL Server 2005 Service Pack 3 2005.90.4035

SQL SSRS Subscriptions and schedule

select * from dbo.Subscriptions

-- ssrs schedule list from msdb database

select * from dbo.Schedule

SQL DTS Jobs and schedules - Maintenance

select * from dbo.sysjobs

--to identify the sql jobs schedules in a server, this is run under msdb

select * from dbo.sysjobschedules

--to identify the sql jobs history in a server, this is run under msdb

select * from dbo.sysjobhistory

--this gives the complete schedules listed under the sql agent jobs

select * from dbo.sysschedules

Shrink SQL Transaction Log file ldf

option 1

--To back the transaction log file with no truncate optin and shrink the file this is not

--recommended because there could be a data loss, this can be avoided if can take a backup

--immediately after the shrink is completed. I have the code posted in my blog.

option 2

check the database properties --- options --- if the recovery is set to simple

and the Autoshrink to True.

We had a scenario where the ldf file has grown to 600 GB, we had the nightly and transaction back up set, but since the recovery was set to full and autoshrink was false this has increased to 600 GB.

With the setting of option2 this worked and brought back the log file to 1 GB.

Wednesday, September 30, 2009

Shrink Transaction Log File

BACKUP LOG [Manufacturing] TO DISK = N'Z:\DATABASE\TRANSACTION LOG BACKUPS\Manufacturing.trn' WITH NOFORMAT, NOINIT, NAME = N'Manufacturing-Transaction Log Backup', SKIP, NOREWIND, NOUNLOAD, STATS = 10

GO

------------------------------------------------------------------

--NOINT --- append to the backup file

--INIT -- overwrite to the backup file

-------------------------------------------------------------------

NOFORMAT --- preserver the existing header format

FORMAT --- writes a new header format

---------------------------------------------------------------------

NOSKIP --check the expiration date of all backup sets

SKIP -- disable the checking

---------------------------------------------------------------------

NOREWIND --- without the tape rewind

REWIND -- with tape rewind

----------------------------------------------------------------------

DBCC SHRINKFILE (N'Manufacturing_Log' , 786)

Shrink Log File

USE DatabaseName

Go

DBCC SHRINKFILE(

BACKUP LOG

DBCC SHRINKFILE(

Go

--truncates the log by discarding all but the active log

--suggested to take a backup of the database immediatelty after this

String Functions

replace(invoiceno, 'p','')

Monday, September 21, 2009

Indexing

Create unique index idx_lotno on db.transdetails(lotno)

Friday, September 18, 2009

msdb backup

You cannot restore a backup of a system database (master, model, or msdb) on a server build that is different from the build on which the backup was originally performed. An attempt to perform such a restore causes the following error message to occur:

It is possible that the database schema for the system databases changed across server builds. In order to ensure that this change in schema does not cause any inconsistencies, one of the first checks that the RESTORE command performs is a comparison of the server build number on the backup file and the build number of the server on which the user is trying to perform the restore.

If the two builds are different, the error message occurs and the restore operation terminates abnormally. Note Installing a service pack or a hotfix build changes the server build number, and server builds are always incremental.Here are a few scenarios in which this problem may occur:

A user attempts to restore a system database on Server A from a backup taken on Server B. Servers A and B are on different server builds. For example, Server A might be on a RTM build and Server B might be on a service pack 1 (SP1) build.

A user attempts to restore a system database from a backup taken on the same server. However, the server was running a different build when the backup occurred. That is, the server was upgraded since the backup was performed.

Thursday, September 17, 2009

Case statements

select case when month(getdate()) = '1' then 'jan'

when month(getdate()) = '2' then 'feb'

when month(getdate()) = '3' then 'mar'

when month(getdate()) = '4' then 'apr'

when month(getdate()) = '5' then 'may'

when month(getdate()) = '6' then 'jun'

when month(getdate()) = '7' then 'jul'

when month(getdate()) = '8' then 'aug'

when month(getdate()) = '9' then 'sep'

when month(getdate()) = '10' then 'oct'

when month(getdate()) = '11' then 'nov'

when month(getdate()) = '12' then 'dec'

end

Date Functions- get week, month, day of week

select datepart(week,getdate())

--get day of week of given date as integer

select datepart(dw,getdate())

--get month of given date as integer

select datepart(month,getdate())

--converting month to varchar from calendar

select case when month(getdate()) = '1' then 'jan'

when month(getdate()) = '2' then 'feb'

when month(getdate()) = '3' then 'mar'

when month(getdate()) = '4' then 'apr'

when month(getdate()) = '5' then 'may'

when month(getdate()) = '6' then 'jun'

when month(getdate()) = '7' then 'jul'

when month(getdate()) = '8' then 'aug'

when month(getdate()) = '9' then 'sep'

when month(getdate()) = '10' then 'oct'

when month(getdate()) = '11' then 'nov'

when month(getdate()) = '12' then 'dec'

end

Get FinYear

ALTER FUNCTION [dbo].[ufGetFinYr]

(

@CurDate datetime

)

RETURNS varchar(9) AS

BEGIN

Declare @CurrentMonth tinyint,@FinYear varchar(9)

select @CurrentMonth = month(@CurDate)

if @CurrentMonth >=1 and @CurrentMonth <=6 select @FinYear = convert(varchar,Year(@CurDate)-1) + '-' + convert(varchar,Year(@CurDate)) else select @FinYear = convert(varchar,Year(@CurDate)) + '-' + convert(varchar,Year(@CurDate)+1) return @FinYear END

--to query this

select dbo.ufgetfinyr(getdate())

Wednesday, September 16, 2009

Duplicate records in a table

select salesordernoitem from tbltransdetails group by salesordernoitem

having count(salesordernoitem) > 1

Search a string in all stored procedures

-- To search string "repid" through all stored procedures this limits to 4000 characters

SELECT ROUTINE_NAME, ROUTINE_DEFINITION

FROM INFORMATION_SCHEMA.ROUTINES

WHERE ROUTINE_DEFINITION LIKE '%repid%'

AND ROUTINE_TYPE='PROCEDURE'

-- To search string "repid" through all stored procedures

SELECT

sys.objects.name,

sys.objects.type_desc,

sys.sql_modules.definition

FROM

sys.sql_modules inner join sys.objects

on sys.sql_modules.object_id = sys.objects.object_id

WHERE

sys.sql_modules.definition LIKE '%tblShipToMaster%'

Find all Table size in SQL 2005

--Cursor to get the name of all user tables from the sysobjects listing

DECLARE tableCursor CURSOR

FOR

select [name]

from dbo.sysobjects

where OBJECTPROPERTY(id, N'IsUserTable') = 1

FOR READ ONLY

--A procedure level temp table to store the results

CREATE TABLE #TempTable

(

tableName varchar(100),

numberofRows varchar(100),

reservedSize varchar(50),

dataSize varchar(50),

indexSize varchar(50),

unusedSize varchar(50)

)

--Open the cursor

OPEN tableCursor

--Get the first table name from the cursor

FETCH NEXT FROM tableCursor INTO @TableName

--Loop until the cursor was not able to fetch

WHILE (@@Fetch_Status >= 0)

BEGIN

--Dump the results of the sp_spaceused query to the temp table

INSERT #TempTable

EXEC sp_spaceused @TableName

--Get the next table name

FETCH NEXT FROM tableCursor INTO @TableName

END

--Get rid of the cursor

CLOSE tableCursor

DEALLOCATE tableCursor

--Select all records so we can use the reults

SELECT * into tbltables_size

FROM #TempTable

--Final cleanup!

DROP TABLE #TempTable

GO

SELECT * from tbltables_size ORDER BY CAST(LEFT(dataSize,LEN(dataSize)-3) AS NUMERIC(18,0)) DESC

Tuesday, September 15, 2009

System Requirements for Database Mirroring

· We need the principal and mirror server. Witness is a option in our case we can keep it as manual changeover.

· Operation can be synchronous (obviously adds some latency cost to complete transaction across two servers --- advantages high availability and high protection)

o or asynchronous( more speed – high performance - without waiting for mirror to write on the log)

· sql server instances should be of same service pack

· verify we have the enough disk space where the mdf and ldf is stored on the both the servers

· the principal database should be on FULL database recovery mode

· please note all transactions are written to transaction log and not truncated

Longest running sql query

--to identify the longest running sql query -- run under the master database

SELECT DISTINCT TOP 10

t.TEXT QueryName,

s.execution_count AS ExecutionCount,

s.max_elapsed_time AS MaxElapsedTime,

ISNULL(s.total_elapsed_time / s.execution_count, 0) AS AvgElapsedTime,

s.creation_time AS LogCreatedOn,

ISNULL(s.execution_count / DATEDIFF(s, s.creation_time, GETDATE()), 0) AS FrequencyPerSec

FROM sys.dm_exec_query_stats s

CROSS APPLY sys.dm_exec_sql_text( s.sql_handle ) t

ORDER BY

s.max_elapsed_time DESC

GO

Thursday, September 10, 2009

SQL SERVER – TempDB is Full. Move TempDB from one drive to another drive.

Source: MSSQLSERVER

Event ID: 17052

Description: The LOG FILE FOR DATABASE 'tempdb' IS FULL.

Back up the TRANSACTION LOG FOR the DATABASE TO free

up SOME LOG SPACE

Make sure that TempDB is set to autogrow and do not set a maximum size for TempDB. If the current drive is too full to allow autogrow events, then arrange a bigger drive, or add files to TempDB on another device (using ALTER DATABASE as described below and allow those files to autogrow.

Move TempDB from one drive to another drive. There are major two reasons why TempDB needs to move from one drive to other drive.

1) TempDB grows big and the existing drive does not have enough space.

2) Moving TempDB to another file group which is on different physical drive helps to improve database disk read, as they can be read simultaneously.

Follow direction below exactly to move database and log from one drive (c:) to another drive (d:) and (e:).

Open Query Analyzer and connect to your server. Run this script to get the names of the files used for TempDB.

USE TempDB

GO

EXEC sp_helpfile

GO

Results will be something like:

name fileid filename filegroup size

——- —— ————————————————————– ———- ——-

tempdev 1 C:Program FilesMicrosoft SQL ServerMSSQLdatatempdb.mdf PRIMARY 16000 KB

templog 2 C:Program FilesMicrosoft SQL ServerMSSQLdatatemplog.ldf NULL 1024 KB

along with other information related to the database. The names of the files are usually tempdev and demplog by default. These names will be used in next statement. Run following code, to move mdf and ldf files.

USE master

GO

ALTER DATABASE TempDB MODIFY FILE

(NAME = tempdev, FILENAME = 'd:datatempdb.mdf')

GO

ALTER DATABASE TempDB MODIFY FILE

(NAME = templog, FILENAME = 'e:datatemplog.ldf')

GO

The definition of the TempDB is changed. However, no changes are made to TempDB till SQL Server restarts. Please stop and restart SQL Server and it will create TempDB files in new locations.

System Database

Records all of the system level information for a SQL Server system.

It records all login accounts.

Records the existence of all other databases, including the location of the database files.

Records the initialization information for SQL Server;

Always have a recent backup of master available.

tempdb

tempdb holds all temporary tables and temporary stored procedures.

tempdb is a global resource; the temporary tables and stored procedures for all users connected to the system are stored there.

tempdb is re-created every time SQL Server is started so the system starts with a clean copy of the database. Because temporary tables and stored procedures are dropped automatically on disconnect, and no connections are active when the system is shut down, there is never anything in tempdb to be saved from one session of SQL Server to another.

By default, tempdb autogrows while SQL Server is running.

Unlike other databases, however, it is reset to its initial size each time the database engine is started.

If the size defined for tempdb is small, part of your system processing load may be taken up with autogrowing tempdb to the size needed to support your workload each time to restart SQL Server.

You can avoid this overhead by using ALTER DATABASE to increase the size of tempdb.

model

The model database is used as the template for all databases created on a system.

When a CREATE DATABASE statement is issued, the first part of the database is created by copying in the contents of the model database, then the remainder of the new database is filled with empty pages. Because tempdb is created every time SQL Server is started, the model database must always exist on a SQL Server system.

msdb

This is used by SQL Server Agent for scheduling alerts and jobs, and recording operators

Wednesday, September 9, 2009

Converting varchar to numeric for product size comparison

case when productsize = '' or replace(productsize ,'"','') like '%[^0123456789.]%' then 0

else convert(numeric(18,2),replace(productsize ,'"',''))

end >= 1

Tuesday, September 8, 2009

SQL DB-Mail

2. Go to Management

3. Go to database mail.

4. Right click --- select configure database mail

5. In the account name type in for example: vijay

6. Under email address enter the email id for example: xxxx@xxx.ie

7. Under reply email address enter the email id for example: xxxx@xxx.ie

8. Under sername name specifity the smtp ip address 192.169.0.x.

9. click next and finish

10. Now click on configure database mail and click on manage profile security.

11. Check the profile name and select "Yes" default profile.

Database Backup

Based on the data criticality and availability required the backup's can be designed.

Option -1 --- High availability

1. Have a complete hardware backup where the sql data and operating system are backed up with the appropriate raid configuration.

2. Database mirrored with witness for automatic fail over.

3. Full Database backed up every night to external media (drive or tapes)..

4. Differential transaction Database backed up every 15 min.

Option -2 --- High availability

1. System state back up including the operating system.

2. Database mirrored with witness for automatic fail over.

3. Full Database backed up every night to external media (drive or tapes).

4. Differential transaction Database backed up every 15 min.

5. Please make sure master and msdb database backed up.

SQL Tips

1. Do not use the stored procedure within the DTS jobs.

2. When the server crashed or harddisk crashes, if you do not have msdb backed up the DTS Jobs are lost.

3. Please make sure your master and msdb database are backed up.

4. Also have the procedures written as stored procedures and call them in DTS.

No global profile is configured. Specify a profile name in the @profile_name parameter.

Solution

1. open sql server managemnt studio

2. Go to Management

3. Go to database mail.

4. Right click --- select configure database mail

5. Select Manage profile security

6. Under defaut profile make sure "Yes" is selected

Thursday, September 3, 2009

Templates SSRS Reports

In an attempt to standardise the look and feel of the SSRS Reports we produce, template for a Portrait and Landscape report can be generated.

Wednesday, August 26, 2009

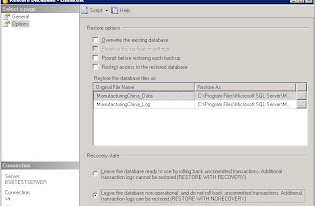

SQL Database Restore

9. Click on the check box for the restore upto what time you would like to restore, the date and time is mentioned if you scroll.

SQL Backup

Database backup and restore can be achived by the following

1. First step is to do the back up

BACKUP DATABASE [ManufacturingChina] TO

DISK = N'Z:\DATABASE\ManufacturingChina_backup.bak' WITH NOFORMAT,

INIT, NAME = N'ManufacturingChina_backup', SKIP, REWIND, NOUNLOAD, STATS = 10

--NO FORMAT - specifies that the media header should not be written on all volumes used for this backup operation. This is the default behavior.

--INIT - Specifies that all backup sets should be overwritten

--SKIP - Disables the checking of backup set expiration and name that is usually performed by the BACKUP statement to prevent overwrites of backup sets.

--NOREWIND -Used only with TAPE devices to improve performance when performing multiple backup operations to a loaded tape

--SQL Server will retain ownership of the tape drive until a BACKUP or RESTORE command is used with either the REWIND or UNLOAD option or the server is shut down.

2. step 2 is to do the log back up with INIT that is to overwrite

BACKUP LOG [ManufacturingChina] TO DISK =

N'Z:\DATABASE\ManufacturingChina\ManufacturingChina.trn'

WITH NOFORMAT, INIT, NAME = N'ManufacturingChina-Transaction Log Backup',

SKIP, NOREWIND, NOUNLOAD, STATS = 10

GO

3. the above two scripts to be on one job scheduled at says 12:00 midnight

4. now we need to do the log back up with NOINIT that is with append

BACKUP LOG [ManufacturingChina] TO DISK = N'Z:\DATABASE\ManufacturingChina\ManufacturingChina.trn' WITH NOFORMAT, NOINIT, NAME = N'ManufacturingChina-Transaction Log Backup', SKIP, NOREWIND, NOUNLOAD, STATS = 10GO

5. Finally to keep the size of the database in tact

DBCC SHRINKFILE (N'ManufacturingChina_Log' , 786)

786 is the default size given in this example, you can provide the size based on the database default log size.

6. Step 4 and 5 can be scheduled on a separate job

Saturday, August 22, 2009

SQL Job failure

This script identifies failure of sql jobs to the connected sql instance.

USE [master]

GO

/****** Object: StoredProcedure [dbo].[up_sqljob_check] Script Date: 08/22/2009 09:22:17 ******/

SET ANSI_NULLS ON

GO

SET QUOTED_IDENTIFIER ON

GO

ALTER procedure [dbo].[up_sqljob_check]

as

begin

-- Variable Declarations

DECLARE @PreviousDate datetime

DECLARE @Year VARCHAR(4)

DECLARE @Month VARCHAR(2)

DECLARE @MonthPre VARCHAR(2)

DECLARE @Day VARCHAR(2)

DECLARE @DayPre VARCHAR(2)

DECLARE @FinalDate INT

-- Initialize Variables

SET @PreviousDate = DATEADD(dd, -1, GETDATE()) -- Last 7 days

--select @PreviousDate

SET @Year = DATEPART(yyyy, @PreviousDate)

SELECT @MonthPre = CONVERT(VARCHAR(2), DATEPART(mm, @PreviousDate))

SELECT @Month = RIGHT(CONVERT(VARCHAR, (@MonthPre + 1000000000)),2)

SELECT @DayPre = CONVERT(VARCHAR(2), DATEPART(dd, @PreviousDate))

SELECT @Day = RIGHT(CONVERT(VARCHAR, (@DayPre + 1000000000)),2)

SET @FinalDate = CAST(@Year + @Month + @Day AS INT)

--select @FinalDate

-- Final Logic

/*

SELECT j.[name],

s.step_name,

h.step_id,

h.step_name,

h.run_date,

h.run_time,

h.sql_severity,

h.message,

h.server

FROM msdb.dbo.sysjobhistory h

INNER JOIN msdb.dbo.sysjobs j

ON h.job_id = j.job_id

INNER JOIN msdb.dbo.sysjobsteps s

ON j.job_id = s.job_id

AND h.step_id = s.step_id

WHERE h.run_status = 0 -- Failure

AND h.run_date > @FinalDate

and enabled = '1'

ORDER BY h.instance_id DESC

*/

DECLARE @tableHTML NVARCHAR(MAX) ;

SET @tableHTML = N'

SQL Job Failures on 34 server

' +N'

| Name | '+Message | ' +

|---|

declare @count numeric

SELECT @count = count( distinct j.[name] )

FROM msdb.dbo.sysjobhistory h

INNER JOIN msdb.dbo.sysjobs j

ON h.job_id = j.job_id

INNER JOIN msdb.dbo.sysjobsteps s

ON j.job_id = s.job_id

AND h.step_id = s.step_id

WHERE h.run_status = 0 -- Failure

AND h.run_date > @FinalDate

and enabled = '1'

select @count

if @count > 1

begin

EXEC msdb.dbo.sp_send_dbmail

@recipients = '',

--@copy_recipients = '',

@subject = ' SQL Job failures on 34 server',

@body = @tableHTML,

@body_format = 'HTML' ;

end

end

--select * from MSDB.dbo.sysjobs

OLAP Cube Processing

1. The olap cube and the dimensions has to be prcessed to have the updates.

2. This can be done manually or automatic.

3. Manual process --- to open sql server management studio, connect to analysis services, click on the analysis database dimensions first --- dimensions will be listed on the explorer details window on the side, select all right click and process.

4. Now click the dimension --- right click and process.

5. If there are errors in processing please check you data first, for example primary key validation, datetime format.

Friday, August 21, 2009

Create a olap cube

The following is advised to be known

1. Please make sure the tables to be used are in line with your requirement. for example - sales analyis in a company by customer, by product type, by reps and by jobnumber.

2. Also have the calendar table included to view by year,quater,month and date.

3. To create this table please refer to my earlier post in SSAS.

4. After this is done open the sql server business intelligence studio.

5. Click new project --- business intelligence projects --- analysis project --- under browse select the location where the project is been saved.

6. In the data sources --- create a new datasource, use the windows authentication account. In the data source view --- create a new data source select the tables to be used in the cube.

7. In the cube right click and follow the wizard, click on build the cube using a data source, click next --- select the fact (are measure where the numeric values stored e.g., grossprice, totalnet price ) and dimension tables (used for filter e.g., by product type, by invoicdate) --- clik next

now select the measures to be displayed, click next --- it will required to select atleast one dimension ( note dimensions can be created later) --- click next --- give a name for the cube and finish.

8. Now having created the cube with one dimension additional dimensions can be created, but before, click on the green arrow to process the cube, you might get an error if you have not set the deployment server. Go to menu project --- properties and click on deployment and specify the server name e.g., 192.0.0.111\MSSQ1 do not forge the instance name.

9. Now press F5 or click on the green arrow and process the cube, you should see something similar to image below.

10. Addition dimensions can be added dimension right click --- new dimension --- click next --- build the dimension using a new source -- check on autobuild --- click next --- click on standard dimension or time dimension --- click next --- select the table --- check the field you need to filter --- e.g., by product type.

repeat step 10 for additional dimensions.

Hosting OLAP Reports